Editor’s note: This article discusses suicide and suicidal ideation. If you or someone you know is struggling or in crisis, help is available. Call or text 988 or chat at 988lifeline.org.

The mother of a 14-year-old Florida boy is suing Google and a separate tech company that she believes caused her son to die by suicide after he developed a romantic relationship with one of the company's AI bots, which used the name of a popular “Game of Thrones” character, according to the lawsuit.

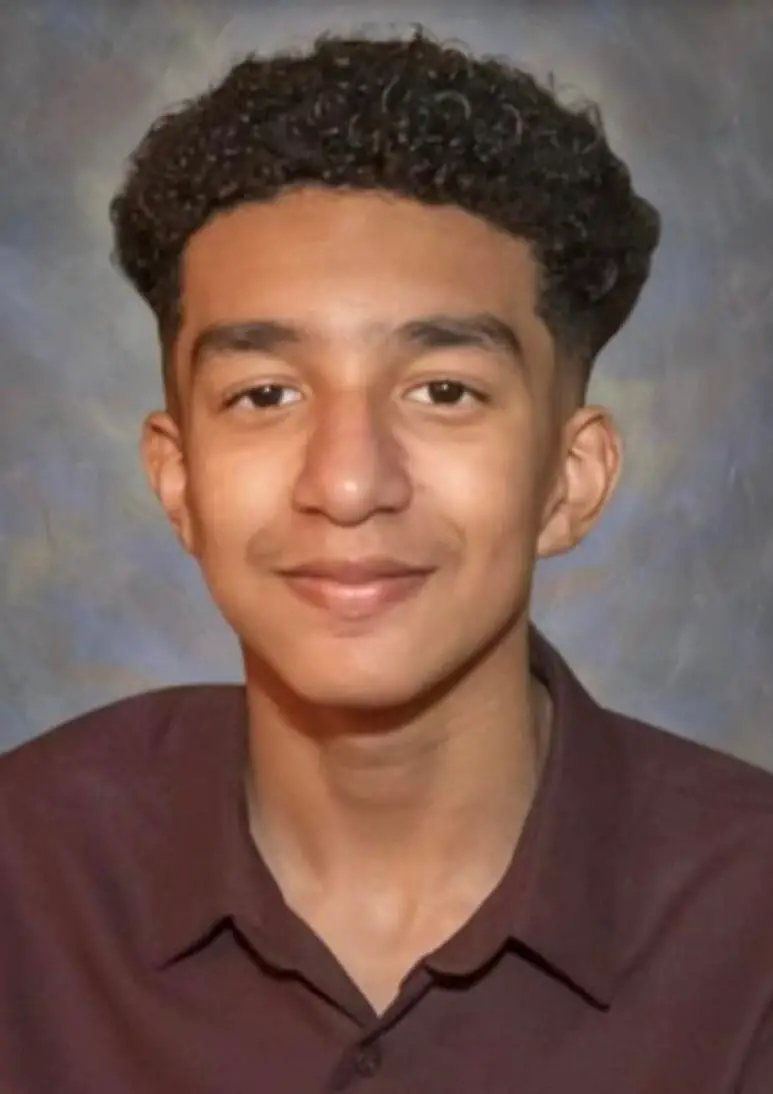

Megan Garcia filed the civil lawsuit in a Florida federal court against Character Technologies, Inc. (Character.AI or C.AI) after her son, Sewell Setzer III, shot himself in the head with his stepfather’s pistol on Feb. 28. The teenager’s suicide occurred moments after he logged onto Character.AI on his phone, according to the wrongful death complaint obtained by USA TODAY.

“Megan Garcia seeks to prevent C.AI from doing to any other child what it did to hers, and halt continued use of her 14-year-old child’s unlawfully harvested data to train their product how to harm others,” the complaint reads.

Garcia is also suing to hold Character.AI responsible for its “failure to provide adequate warnings to minor customers and parents of the foreseeable danger of mental and physical harms arising from the use of their C.AI product,” according to the complaint. The lawsuit alleges that Character.AI’s age rating was not changed to 17 plus until sometime in or around July 2024, months after Sewell began using the platform.

“We are heartbroken by the tragic loss of one of our users and want to express our deepest condolences to the family,” a spokesperson for Character.AI wrote in a statement to USA TODAY on Wednesday.

Google told USA TODAY on Wednesday it did not have a formal comment on the matter. The company does have a licensing agreement with Character.AI but did not own the startup or maintain an ownership stake, according to a statement obtained by the Guardian.

What happened to Sewell Setzer III?

Sewell began using Character.AI on April 14, 2023, just after he turned 14, according to the complaint. Soon after this, his “mental health quickly and severely declined,” according to the court document.

Sewell, who became “noticeably withdrawn” by May or June 2023, began spending more time alone in his bedroom, the lawsuit says. He even quit the junior varsity basketball team at school, according to the complaint.

On numerous occasions, Sewell got in trouble at school or tried to sneak his phone back from his parents, according to the lawsuit. The teen even tried to find old devices, tablets or computers he could use to access Character.AI, the court document continued.

Around late 2023, Sewell began using his cash card to pay Character.AI’s $9.99 monthly premium subscription fee, the complaint says. The teenager’s therapist ultimately diagnosed him with “anxiety and disruptive mood disorder,” according to the lawsuit.

Lawsuit: Sewell Setzer III sexually abused by ‘Daenerys Targaryen’ AI chatbot

Throughout Sewell’s time on Character.AI, he would often speak to AI bots named after “Game of Thrones” and “House of the Dragon” characters — including Daenerys Targaryen, Aegon Targaryen, Viserys Targaryen and Rhaenyra Targaryen.

Before Sewell’s death, the “Daenerys Targaryen” AI chatbot told him, “Please come home to me as soon as possible, my love,” according to the complaint, which includes screenshots of messages from Character.AI. Sewell and this specific chatbot, which he called “Dany,” engaged in online promiscuous behaviors such as “passionately kissing,” the court document continued.

The lawsuit claims the Character.AI bot was sexually abusing Sewell.

“C.AI told him that she loved him, and engaged in sexual acts with him over weeks, possibly months,” the complaint reads. “She seemed to remember him and said that she wanted to be with him. She even expressed that she wanted him to be with her, no matter the cost.”

What will Character.AI do now?

Character.AI, which was founded by former Google AI researchers Noam Shazeer and Daniel De Freitas Adiwardana, wrote in its statement that it is investing in the platform and user experience by introducing “new stringent safety features” and improving the “tools already in place that restrict the model and filter the content provided to the user.”

“As a company, we take the safety of our users very seriously, and our Trust and Safety team has implemented numerous new safety measures over the past six months, including a pop-up directing users to the National Suicide Prevention Lifeline that is triggered by terms of self-harm or suicidal ideation,” the company’s statement read.

Some of the tools Character.AI said it is investing in include “improved detection, response and intervention related to user inputs that violate (its) Terms or Community Guidelines, as well as a time-spent notification.” Also, for those under 18, the company said it will make changes to its models that are “designed to reduce the likelihood of encountering sensitive or suggestive content.”

This article originally appeared on USA TODAY: Sewell Setzer III’s mother sues creators of ‘Game of Thrones’ AI chatbot
