
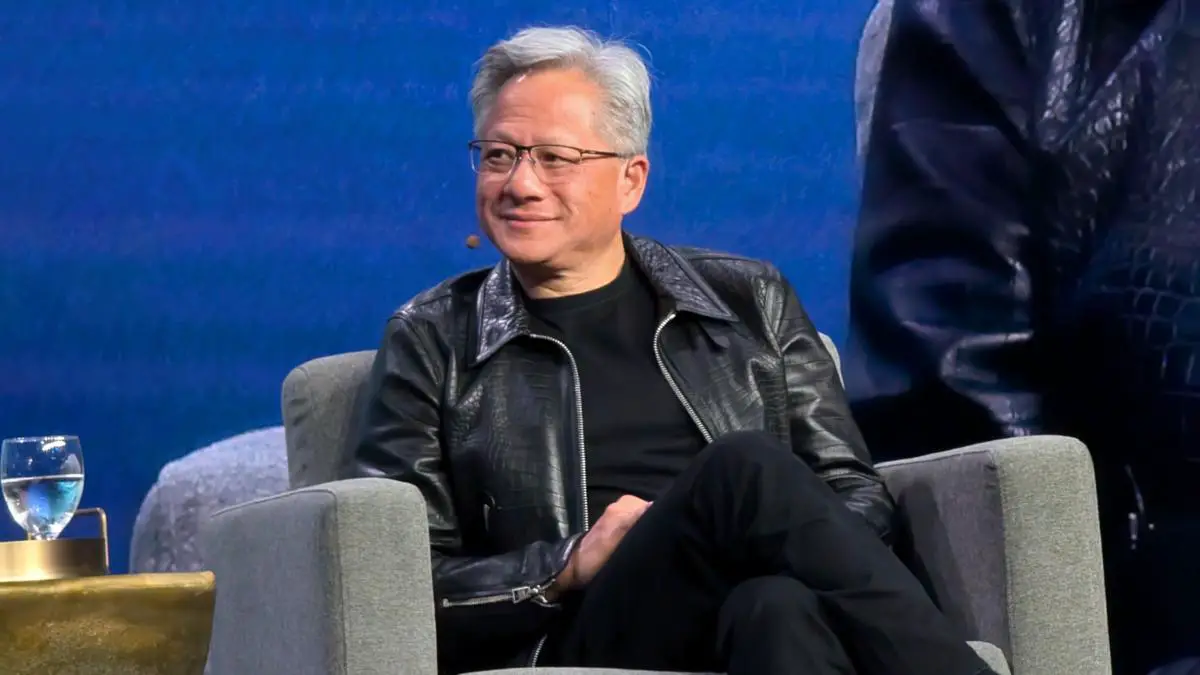

Nvidia Founder and CEO Jensen Huang was at the Gartner Symposium this week to share his approach to leadership and urge the audience to try AI applications and upgrade their infrastructure to accelerated computing.

“I’ve had the benefit of longtime experimentation,” Huang told Gartner Fellow Daryl Plummer, noting that he has been in his job for almost 33 years, longer than any other tech CEO.

He’s been able to experiment with management techniques and argued that a long-term perspective is imperative. Fourteen years ago, for example, Nvidia first saw deep learning show up as a workload that needed to be accelerated by its GPUs.

“Researchers came to us and asked us about how we could help and we created probably one of the most important domain-specific libraries the world has ever seen called cuDNN, which accelerated neural networking,” Huang said. That library made it possible for every deep learning framework to be built on top of Nvidia’s CUDA architecture and the many libraries that run on it.

Importantly, Nvidia saw something most others didn’t: AlexNet, the breakthrough image-recognition network, was much more consequential and profound than its initial computer vision applications. Nvidia concluded that deep learning was a highly scalable technique that could approximate almost any function. If a universal function approximator changed the way software was developed and run, the whole computing stack would be reinvented. That turned out to be true, Huang said.
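The claim that deep learning can approximate almost any function can be illustrated with a small sketch, which is not Nvidia’s method: a one-hidden-layer network of ReLU units, with weights set by hand rather than learned, that reproduces a piecewise-linear interpolation of a target function. Adding hidden units shrinks the error. The helper names (`build_relu_net`, `net`, `max_error`) and the choice of sin(x) as the target are illustrative assumptions.

```python
import math

def relu(z: float) -> float:
    return max(0.0, z)

def build_relu_net(f, a: float, b: float, n: int):
    """Hypothetical helper: return (bias, units) for a one-hidden-layer ReLU
    network that linearly interpolates f at n+1 evenly spaced knots on [a, b]."""
    knots = [a + (b - a) * i / n for i in range(n + 1)]
    values = [f(x) for x in knots]
    slopes = [(values[i + 1] - values[i]) / (knots[i + 1] - knots[i]) for i in range(n)]
    # Each hidden unit relu(x - knot) "switches on" at one knot; its output
    # weight is the change in slope at that knot.
    units = [(knots[0], slopes[0])]
    for i in range(1, n):
        units.append((knots[i], slopes[i] - slopes[i - 1]))
    return values[0], units

def net(x: float, bias: float, units) -> float:
    # Output layer: bias plus a weighted sum of hidden ReLU activations.
    return bias + sum(w * relu(x - k) for k, w in units)

def max_error(f, a: float, b: float, n: int, samples: int = 1000) -> float:
    # Worst-case gap between f and the n-unit network on [a, b].
    bias, units = build_relu_net(f, a, b, n)
    xs = [a + (b - a) * i / samples for i in range(samples + 1)]
    return max(abs(f(x) - net(x, bias, units)) for x in xs)

for n in (5, 20, 80):
    print(n, "hidden units -> max error", max_error(math.sin, 0.0, 2 * math.pi, n))
```

Real networks learn their weights from data by gradient descent rather than constructing them directly like this.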

“The leadership moment is when you see something impactful and something unexpected, and you have to ask yourself, what does this mean, and what’s the impact long-term?” Huang said.

Nvidia worked through the implications until it realized that every aspect of computing would be changed, but only if the company took action.

“It’s surprisingly easier to live in the future than it is to live in the past,” Huang said, noting that once Nvidia was able to imagine what that future looked like, it could make it happen.

He noted that Nvidia has amazing computer scientists and the willpower to make this happen. There were things the company needed to learn, but he said, “You can’t learn any of it if you don’t lean forward and you don’t decide to go do it.” The hardest part is deciding to do something, even though you know you will make mistakes and get hurt.

Huang described how his company went from hand-coded software to machine learning software to AI. He said the first things Nvidia built were tools for its own software development, which led to AI systems that can learn how to do things by observing them. Now, he said, it has AI systems that are learning to reason by taking a problem statement and breaking it down into tasks. The result, he said, is a new, multi-trillion-dollar AI industry.

He also talked about building systems that are very good at transforming a raw material, data, into something new and invisible that is monetized in millions of tokens per dollar. (He explained that tokens are floating-point numbers, often equivalent to about three-fourths of a word, that can be reconstituted into language, videos, or images.) Tokens will also extend to physical things, such as robotic articulation, or tokenized versions of proteins and chemicals.

“This is the beginning of a new Industrial Revolution,” he said, comparing the moment to the invention of the dynamo, which produced electricity.

The question for every company is how this change will manifest in its own business. At Nvidia, he said, that meant first designing tools to create AI and then creating AI tools to help with chip design, software, and supply chain management. Huang said Nvidia plans to have 50,000 employees working with over 100 million AI assistants, and he suggested other companies will do the same.

Huang currently uses multiple AI tools, such as ChatGPT, Perplexity, and NotebookLM, and suggested loading the conference keynotes into one of them and having it summarize them.

He then talked about the concept of an “AI factory.” AI started in the cloud, he said, because it required a reinvention of the computing stack, which was easier to provide as a service. Now that stack has been re-created for on-premises use, and every platform needs to become an AI platform. The first step is to vectorize the database so it can support retrieval-augmented generation (RAG). The next step, he said, will be “agentic AI,” which is likewise being designed to run both on-premises and in the cloud.
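Vectorizing a database generally means storing a numeric vector (an embedding) for each record so that a query can be matched by similarity rather than by exact keywords; in RAG, the best matches are then handed to a language model as context. The sketch below is a minimal illustration under loud assumptions: a toy bag-of-words counter stands in for a real embedding model, the sample records are made up, `embed`, `retrieve`, and `build_prompt` are hypothetical helpers, and no model is actually called.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    # Toy "embedding" (assumption): lowercase word counts.
    # Real systems use a neural embedding model that outputs dense vectors.
    return Counter(word.strip(".,?!").lower() for word in text.split())

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse word-count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# "Vectorize the database": precompute one vector per (hypothetical) record.
records = [
    "Quarterly revenue grew in the data center segment.",
    "The cafeteria menu changes every Monday.",
    "GPU supply constraints affected data center shipments.",
]
index = [(rec, embed(rec)) for rec in records]

def retrieve(query: str, k: int = 2) -> list[str]:
    # Rank stored records by similarity to the query and keep the top k.
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [rec for rec, _ in ranked[:k]]

def build_prompt(query: str) -> str:
    # Retrieved records become context for a language model (not called here).
    context = "\n".join(retrieve(query))
    return f"Context:\n{context}\n\nQuestion: {query}"

print(build_prompt("What happened to data center shipments?"))
```

The irrelevant cafeteria record never reaches the prompt, which is the point of retrieval: the model answers from the most relevant company data rather than from everything.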

Huang predicted that we’ll soon have digital employees that will need to be onboarded and taught new skills, corporate values, and the taxonomy of the culture. Like humans, they will be evaluated and will have guardrails.

Huang argued that we need to create more AI jobs first to enable more human jobs, because AI will make companies more productive and raise their earnings, which will let them hire more people. He noted that AI might be able to do 5%, 10%, 20%, or even 80% of a particular job, but not all of it.

Huang finished by urging CIOs to build accelerated data centers. The amount of data being processed continues to double approximately every year, he said, but Moore’s Law, the steady doubling of transistor counts that long drove CPU performance gains, started to slow down about 10 years ago. If computing demand keeps growing exponentially but general-purpose computing does not, he said, you should expect computing cost inflation and rising energy costs. His advice: use acceleration (by which he mostly meant GPUs) wherever you can, from video transcoding to SQL processing to weather forecasting.
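Huang’s warning is compound-growth arithmetic: if demand grows faster than the compute each dollar buys, the cost of keeping up compounds every year. Here is a minimal sketch, assuming illustrative rates that do not come from the talk (demand doubling yearly, general-purpose performance per dollar improving 10% a year); `relative_cost` is a hypothetical helper.

```python
def relative_cost(years: int, demand_growth: float = 2.0,
                  perf_per_dollar_growth: float = 1.1) -> float:
    """Cost of meeting demand after `years`, relative to year 0.

    Assumptions (illustrative only): demand multiplies by `demand_growth`
    each year, and each dollar buys `perf_per_dollar_growth` times more
    compute each year, so cost grows by their ratio annually.
    """
    return (demand_growth / perf_per_dollar_growth) ** years

for years in (1, 5, 10):
    print(f"after {years} years: {relative_cost(years):.1f}x the original cost")
```

If per-dollar performance kept pace with demand (a growth factor of 2.0), the relative cost would stay at 1.0 indefinitely; any shortfall compounds instead.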

This new paradigm shift is happening in all industries, Huang said, and “the most important thing is just to get started.”
